Introduction

Spock is a testing and specification framework for Java and Groovy applications. What makes it stand out from the crowd is its beautiful and highly expressive specification language. Thanks to its JUnit runner, Spock is compatible with most IDEs, build tools, and continuous integration servers. Spock is inspired by JUnit, jMock, RSpec, Groovy, Scala, Vulcans, and other fascinating life forms.

Getting Started

It’s really easy to get started with Spock. This section shows you how.

Spock Web Console

Spock Web Console is a website that allows you to instantly view, edit, run, and even publish Spock specifications. It is the perfect place to toy around with Spock without making any commitments. So why not run Hello, Spock! right away?

Spock Example Project

To try Spock in your local environment, clone or download/unzip the Spock Example Project. It comes with fully working Ant, Gradle, and Maven builds that require no further setup. The Gradle build even bootstraps Gradle itself and gets you up and running in Eclipse or IDEA with a single command. See the README for detailed instructions.

Spock Primer

This chapter assumes that you have a basic knowledge of Groovy and unit testing. If you are a Java developer but haven’t heard about Groovy, don’t worry - Groovy will feel very familiar to you! In fact, one of Groovy’s main design goals is to be the scripting language alongside Java. So just follow along and consult the Groovy documentation whenever you feel like it.

The goals of this chapter are to teach you enough Spock to write real-world Spock specifications, and to whet your appetite for more.

To learn more about Groovy, go to https://groovy-lang.org/.

To learn more about unit testing, go to https://en.wikipedia.org/wiki/Unit_testing.

Terminology

Let’s start with a few definitions: Spock lets you write specifications that describe expected features (properties, aspects) exhibited by a system of interest. The system of interest could be anything between a single class and a whole application, and is also called the system under specification or SUS. The description of a feature starts from a specific snapshot of the SUS and its collaborators; this snapshot is called the feature’s fixture.

The following sections walk you through all building blocks of which a Spock specification may be composed. A typical specification uses only a subset of them.

Imports

import spock.lang.*

Package spock.lang contains the most important types for writing specifications.

Specification

class MyFirstSpecification extends Specification {

// fields

// fixture methods

// feature methods

// helper methods

}

A specification is represented as a Groovy class that extends spock.lang.Specification. The name of a specification usually relates to the system or system operation described by the specification. For example, CustomerSpec, H264VideoPlayback, and ASpaceshipAttackedFromTwoSides are all reasonable names for a specification.

Class Specification contains a number of useful methods for writing specifications. Furthermore, it instructs JUnit to run the specification with Sputnik, Spock’s JUnit runner. Thanks to Sputnik, Spock specifications can be run by most modern Java IDEs and build tools.

Fields

def obj = new ClassUnderSpecification()

def coll = new Collaborator()

Instance fields are a good place to store objects belonging to the specification’s fixture. It is good practice to initialize them right at the point of declaration. (Semantically, this is equivalent to initializing them at the very beginning of the setup() method.) Objects stored into instance fields are not shared between feature methods. Instead, every feature method gets its own object. This helps to isolate feature methods from each other, which is often a desirable goal.

@Shared res = new VeryExpensiveResource()

Sometimes you need to share an object between feature methods. For example, the object might be very expensive to create, or you might want your feature methods to interact with each other. To achieve this, declare a @Shared field. Again it’s best to initialize the field right at the point of declaration. (Semantically, this is equivalent to initializing the field at the very beginning of the setupSpec() method.)

static final PI = 3.141592654

Static fields should only be used for constants. Otherwise, shared fields are preferable, because their semantics with respect to sharing are better defined.
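The difference between instance fields and @Shared fields can be made visible with a small sketch. The spec below is hypothetical (FieldSharingSpec and its field names are not part of Spock or of the original text), and it uses @Stepwise only to force the two feature methods to run in declaration order.

```groovy
import spock.lang.Shared
import spock.lang.Specification
import spock.lang.Stepwise

// Hypothetical spec; @Stepwise runs the features in declaration order.
@Stepwise
class FieldSharingSpec extends Specification {
    def perFeature = []             // re-created for every feature method
    @Shared def sharedAcross = []   // created once, shared by all feature methods

    def "first feature adds to both lists"() {
        when:
        perFeature << 1
        sharedAcross << 1

        then:
        perFeature == [1]
        sharedAcross == [1]
    }

    def "second feature sees only the shared element"() {
        expect:
        perFeature.isEmpty()        // a fresh instance field
        sharedAcross == [1]         // state left behind by the first feature
    }
}
```

Relying on state left behind by another feature method is exactly the coupling that instance fields avoid, so a shared field like this is best reserved for expensive resources.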

Fixture Methods

def setupSpec() {} // runs once - before the first feature method

def setup() {} // runs before every feature method

def cleanup() {} // runs after every feature method

def cleanupSpec() {} // runs once - after the last feature method

Fixture methods are responsible for setting up and cleaning up the environment in which feature methods are run.

Usually it’s a good idea to use a fresh fixture for every feature method, which is what the setup() and cleanup() methods are for.

All fixture methods are optional.

Occasionally it makes sense for feature methods to share a fixture, which is achieved by using shared fields together with the setupSpec() and cleanupSpec() methods.

Note that setupSpec() and cleanupSpec() may not reference instance fields unless they are annotated with @Shared.

Note: There may be only one fixture method of each type per specification class.

Invocation Order

If fixture methods are overridden in a specification subclass then setup() of the superclass will run before setup() of the subclass.

cleanup() works in reverse order, that is cleanup() of the subclass will execute before cleanup() of the superclass.

setupSpec() and cleanupSpec() behave in the same way.

There is no need to explicitly call super.setup() or super.cleanup() as Spock will automatically find and execute fixture methods at all levels in an inheritance hierarchy.

- super.setupSpec
- sub.setupSpec
- super.setup
- sub.setup
- feature method
- sub.cleanup
- super.cleanup
- sub.cleanupSpec
- super.cleanupSpec
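The invocation order listed above can be observed with a pair of specs that record each fixture call. This is only a sketch: BaseSpec, SubSpec, and the static LOG list are hypothetical names introduced for illustration.

```groovy
import spock.lang.Specification

// Hypothetical base spec: every fixture method appends its name to a static log.
abstract class BaseSpec extends Specification {
    static final List<String> LOG = []

    def setupSpec()   { LOG << "super.setupSpec" }
    def setup()       { LOG << "super.setup" }
    def cleanup()     { LOG << "super.cleanup" }
    def cleanupSpec() { LOG << "super.cleanupSpec" }
}

class SubSpec extends BaseSpec {
    def setupSpec()   { LOG << "sub.setupSpec" }
    def setup()       { LOG << "sub.setup" }
    def cleanup()     { LOG << "sub.cleanup" }
    def cleanupSpec() { LOG << "sub.cleanupSpec" }

    def "superclass fixture methods run before subclass fixture methods"() {
        expect:
        // No explicit super calls were needed to get this order.
        LOG == ["super.setupSpec", "sub.setupSpec", "super.setup", "sub.setup"]
    }
}
```

The base spec is declared abstract so that it is not run on its own, which would add entries to the log.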

Feature Methods

def "pushing an element on the stack"() {

// blocks go here

}

Feature methods are the heart of a specification. They describe the features (properties, aspects) that you expect to find in the system under specification. By convention, feature methods are named with String literals. Try to choose good names for your feature methods, and feel free to use any characters you like!

Conceptually, a feature method consists of four phases:

- Set up the feature’s fixture
- Provide a stimulus to the system under specification
- Describe the response expected from the system
- Clean up the feature’s fixture

Whereas the first and last phases are optional, the stimulus and response phases are always present (except in interacting feature methods), and may occur more than once.

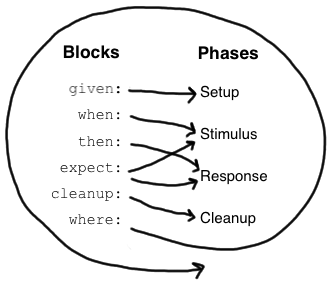

Blocks

Spock has built-in support for implementing each of the conceptual phases of a feature method. To this end, feature methods are structured into so-called blocks. Blocks start with a label, and extend to the beginning of the next block, or the end of the method. There are six kinds of blocks: given, when, then, expect, cleanup, and where blocks.

Any statements between the beginning of the method and the first explicit block belong to an implicit given block.

A feature method must have at least one explicit (i.e. labelled) block - in fact, the presence of an explicit block is what makes a method a feature method. Blocks divide a method into distinct sections, and cannot be nested.

The picture on the right shows how blocks map to the conceptual phases of a feature method. The where block has a special role, which will be revealed shortly. But first, let’s have a closer look at the other blocks.

Given Blocks

given:

def stack = new Stack()

def elem = "push me"

The given block is where you do any setup work for the feature that you are describing. It may not be preceded by other blocks, and may not be repeated. A given block doesn’t have any special semantics. The given: label is optional and may be omitted, resulting in an implicit given block. Originally, the alias setup: was the preferred block name, but using given: often leads to a more readable feature method description (see Specifications as Documentation).

When and Then Blocks

when: // stimulus

then: // response

The when and then blocks always occur together. They describe a stimulus and the expected response. Whereas when blocks may contain arbitrary code, then blocks are restricted to conditions, exception conditions, interactions, and variable definitions. A feature method may contain multiple pairs of when-then blocks.

Conditions

Conditions describe an expected state, much like JUnit’s assertions. However, conditions are written as plain boolean expressions, eliminating the need for an assertion API. (More precisely, a condition may also produce a non-boolean value, which will then be evaluated according to Groovy truth.) Let’s see some conditions in action:

when:

stack.push(elem)

then:

!stack.empty

stack.size() == 1

stack.peek() == elem

Tip: Try to keep the number of conditions per feature method small. One to five conditions is a good guideline. If you have more than that, ask yourself if you are specifying multiple unrelated features at once. If the answer is yes, break up the feature method into several smaller ones. If your conditions only differ in their values, consider using a data table.

What kind of feedback does Spock provide if a condition is violated? Let’s try and change the second condition to stack.size() == 2. Here is what we get:

Condition not satisfied:

stack.size() == 2
|     |      |
|     1      false
[push me]

As you can see, Spock captures all values produced during the evaluation of a condition, and presents them in an easily digestible form. Nice, isn’t it?

Implicit and explicit conditions

Conditions are an essential ingredient of then blocks and expect blocks. Except for calls to void methods and expressions classified as interactions, all top-level expressions in these blocks are implicitly treated as conditions. To use conditions in other places, you need to designate them with Groovy’s assert keyword:

def setup() {

stack = new Stack()

assert stack.empty

}

If an explicit condition is violated, it will produce the same nice diagnostic message as an implicit condition.

Exception Conditions

Exception conditions are used to describe that a when block should throw an exception. They are defined using the thrown() method, passing along the expected exception type. For example, to describe that popping from an empty stack should throw an EmptyStackException, you could write the following:

when:

stack.pop()

then:

thrown(EmptyStackException)

stack.empty

As you can see, exception conditions may be followed by other conditions (and even other blocks). This is particularly useful for specifying the expected content of an exception. To access the exception, first bind it to a variable:

when:

stack.pop()

then:

def e = thrown(EmptyStackException)

e.cause == null

Alternatively, you may use a slight variation of the above syntax:

when:

stack.pop()

then:

EmptyStackException e = thrown()

e.cause == null

This syntax has two small advantages: First, the exception variable is strongly typed, making it easier for IDEs to offer code completion. Second, the condition reads a bit more like a sentence ("then an EmptyStackException is thrown"). Note that if no exception type is passed to the thrown() method, it is inferred from the variable type on the left-hand side.

Sometimes we need to convey that an exception should not be thrown. For example, let’s try to express that a HashMap should accept a null key:

def "HashMap accepts null key"() {

setup:

def map = new HashMap()

map.put(null, "elem")

}

This works but doesn’t reveal the intention of the code. Did someone just leave the building before they had finished implementing this method? After all, where are the conditions? Fortunately, we can do better:

def "HashMap accepts null key"() {

given:

def map = new HashMap()

when:

map.put(null, "elem")

then:

notThrown(NullPointerException)

}

By using notThrown(), we make it clear that in particular a NullPointerException should not be thrown. (As per the contract of Map.put(), this would be the right thing to do for a map that doesn’t support null keys.) However, the method will also fail if any other exception is thrown.
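When no particular exception type needs to be singled out, the Specification class also provides noExceptionThrown(). The following sketch rewrites the HashMap example with it; the spec name NullKeySpec is made up for illustration.

```groovy
import spock.lang.Specification

// Hypothetical spec name; the feature mirrors the HashMap example above.
class NullKeySpec extends Specification {
    def "HashMap accepts null key"() {
        given:
        def map = new HashMap()

        when:
        map.put(null, "elem")

        then:
        noExceptionThrown()      // fails if the when block threw any exception
        map.containsKey(null)    // further conditions may follow
    }
}
```

Compared with notThrown(NullPointerException), this variant says nothing about which exception was a concern, so it fits best when the intent is simply "this call succeeds".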

Interactions

Whereas conditions describe an object’s state, interactions describe how objects communicate with each other. Interactions and Interaction based testing are described in a separate chapter, so we only give a quick example here. Suppose we want to describe the flow of events from a publisher to its subscribers. Here is the code:

def "events are published to all subscribers"() {

given:

def subscriber1 = Mock(Subscriber)

def subscriber2 = Mock(Subscriber)

def publisher = new Publisher()

publisher.add(subscriber1)

publisher.add(subscriber2)

when:

publisher.fire("event")

then:

1 * subscriber1.receive("event")

1 * subscriber2.receive("event")

}

Expect Blocks

An expect block is more limited than a then block in that it may only contain conditions and variable definitions. It is useful in situations where it is more natural to describe stimulus and expected response in a single expression. For example, compare the following two attempts to describe the Math.max() method:

when:

def x = Math.max(1, 2)

then:

x == 2

expect:

Math.max(1, 2) == 2

Although both snippets are semantically equivalent, the second one is clearly preferable. As a guideline, use when-then to describe methods with side effects, and expect to describe purely functional methods.

Tip: Leverage Groovy JDK methods like any() and every() to create more expressive and succinct conditions.
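As a brief illustration of this tip, the sketch below states two conditions over a whole collection with every() and any(). The spec name and the sample words are made up for the example.

```groovy
import spock.lang.Specification

// Hypothetical spec showing collection-wide conditions.
class CollectionConditionsSpec extends Specification {
    def "conditions over whole collections"() {
        given:
        def words = ["spock", "sputnik", "groovy"]

        expect:
        words.every { it.size() >= 5 }       // all elements satisfy the predicate
        words.any { it.startsWith("sp") }    // at least one element satisfies it
    }
}
```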

Cleanup Blocks

given:

def file = new File("/some/path")

file.createNewFile()

// ...

cleanup:

file.delete()

A cleanup block may only be followed by a where block, and may not be repeated. Like a cleanup method, it is used to free any resources used by a feature method, and is run even if (a previous part of) the feature method has produced an exception. As a consequence, a cleanup block must be coded defensively; in the worst case, it must gracefully handle the situation where the first statement in a feature method has thrown an exception, and all local variables still have their default values.

Tip: Groovy’s safe dereference operator (foo?.bar()) simplifies writing defensive code.
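A defensively coded cleanup block might look like the following sketch. It assumes a temporary file as the resource; the spec name and file prefix are hypothetical.

```groovy
import spock.lang.Specification

// Hypothetical spec: the cleanup block tolerates a failed setup.
class TempFileSpec extends Specification {
    def "writes to a temporary file"() {
        given:
        File file = File.createTempFile("spock-demo", ".txt")   // may throw

        when:
        file.text = "hello"

        then:
        file.text == "hello"

        cleanup:
        file?.delete()   // file is still null if createTempFile() threw
    }
}
```

Without the safe dereference operator, a failure in the given block would be followed by a NullPointerException from the cleanup block.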

Object-level specifications usually don’t need a cleanup method, as the only resource they consume is memory, which is automatically reclaimed by the garbage collector. More coarse-grained specifications, however, might use a cleanup block to clean up the file system, close a database connection, or shut down a network service.

Tip: If a specification is designed in such a way that all its feature methods require the same resources, use a cleanup() method; otherwise, prefer cleanup blocks. The same trade-off applies to setup() methods and given blocks.

Where Blocks

A where block always comes last in a method, and may not be repeated. It is used to write data-driven feature methods. To give you an idea how this is done, have a look at the following example:

def "computing the maximum of two numbers"() {

expect:

Math.max(a, b) == c

where:

a << [5, 3]

b << [1, 9]

c << [5, 9]

}

This where block effectively creates two "versions" of the feature method: one where a is 5, b is 1, and c is 5, and another one where a is 3, b is 9, and c is 9.

Although it is declared last, the where block is evaluated before the feature method containing it runs.

The where block is further explained in the Data Driven Testing chapter.

Helper Methods

Sometimes feature methods grow large and/or contain lots of duplicated code. In such cases it can make sense to introduce one or more helper methods. Two good candidates for helper methods are setup/cleanup logic and complex conditions. Factoring out the former is straightforward, so let’s have a look at conditions:

def "offered PC matches preferred configuration"() {

when:

def pc = shop.buyPc()

then:

pc.vendor == "Sunny"

pc.clockRate >= 2333

pc.ram >= 4096

pc.os == "Linux"

}

If you happen to be a computer geek, your preferred PC configuration might be very detailed, or you might want to compare offers from many different shops. Therefore, let’s factor out the conditions:

def "offered PC matches preferred configuration"() {

when:

def pc = shop.buyPc()

then:

matchesPreferredConfiguration(pc)

}

def matchesPreferredConfiguration(pc) {

pc.vendor == "Sunny"

&& pc.clockRate >= 2333

&& pc.ram >= 4096

&& pc.os == "Linux"

}

The new helper method matchesPreferredConfiguration() consists of a single boolean expression whose result is returned. (The return keyword is optional in Groovy.) This is fine except for the way that an inadequate offer is now presented:

Condition not satisfied:

matchesPreferredConfiguration(pc)
|                             |
false                         ...

Not very helpful. Fortunately, we can do better:

void matchesPreferredConfiguration(pc) {

assert pc.vendor == "Sunny"

assert pc.clockRate >= 2333

assert pc.ram >= 4096

assert pc.os == "Linux"

}

When factoring out conditions into a helper method, two points need to be considered: First, implicit conditions must be turned into explicit conditions with the assert keyword. Second, the helper method must have return type void. Otherwise, Spock might interpret the return value as a failing condition, which is not what we want.

As expected, the improved helper method tells us exactly what’s wrong:

Condition not satisfied:

assert pc.clockRate >= 2333
       |  |         |
       |  1666      false
       ...

A final piece of advice: Although code reuse is generally a good thing, don’t take it too far. Be aware that the use of fixture and helper methods can increase the coupling between feature methods. If you reuse too much or the wrong code, you will end up with specifications that are fragile and hard to evolve.

Using with for expectations

As an alternative to the above helper methods, you can use a with(target, closure) method to operate on the object being verified. This is especially useful in then and expect blocks.

def "offered PC matches preferred configuration"() {

when:

def pc = shop.buyPc()

then:

with(pc) {

vendor == "Sunny"

clockRate >= 2333

ram >= 4096

os == "Linux"

}

}

Unlike when you use helper methods, there is no need for explicit assert statements for proper error reporting.

When verifying mocks, a with statement can also cut out verbose verification statements.

def service = Mock(Service) // has start(), stop(), and doWork() methods

def app = new Application(service) // controls the lifecycle of the service

when:

app.run()

then:

with(service) {

1 * start()

1 * doWork()

1 * stop()

}

Sometimes an IDE has trouble determining the type of the target. In that case, you can help out by manually specifying the target type via with(target, type, closure).
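The typed variant could be used as in the sketch below. The Pc class and its property values are hypothetical and exist only to make the example self-contained; the variable is deliberately declared as Object to mimic a situation where the static type is unknown to the IDE.

```groovy
import spock.lang.Specification

// Hypothetical class, defined here only to make the example self-contained.
class Pc {
    String vendor = "Sunny"
    int clockRate = 2400
    int ram = 8192
    String os = "Linux"
}

class TypedWithSpec extends Specification {
    def "offered PC matches preferred configuration"() {
        when:
        Object offer = new Pc()   // static type hides the properties from the IDE

        then:
        with(offer, Pc) {         // the type hint restores code completion
            vendor == "Sunny"
            clockRate >= 2333
            ram >= 4096
            os == "Linux"
        }
    }
}
```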

Using verifyAll to assert multiple expectations together

Normal expectations fail the test on the first failed assertion. Sometimes it is helpful to collect all failures before failing the test in order to have more information; this behavior is also known as soft assertions.

The verifyAll method can be used like with,

def "offered PC matches preferred configuration"() {

when:

def pc = shop.buyPc()

then:

verifyAll(pc) {

vendor == "Sunny"

clockRate >= 2333

ram >= 4096

os == "Linux"

}

}

or it can be used without a target.

expect:

verifyAll {

2 == 2

4 == 4

}

As with the with method, you can optionally define a type hint for the IDE.

Specifications as Documentation

Well-written specifications are a valuable source of information. Especially for higher-level specifications targeting a wider audience than just developers (architects, domain experts, customers, etc.), it makes sense to provide more information in natural language than just the names of specifications and features. Therefore, Spock provides a way to attach textual descriptions to blocks:

given: "open a database connection"

// code goes here

Use the and: label to describe logically different parts of a block:

given: "open a database connection"

// code goes here

and: "seed the customer table"

// code goes here

and: "seed the product table"

// code goes here

An and: label followed by a description can be inserted at any (top-level) position of a feature method, without altering the method’s semantics.

In Behavior Driven Development, customer-facing features (called stories) are described in a given-when-then format. Spock directly supports this style of specification with the given: label:

given: "an empty bank account"

// ...

when: "the account is credited \$10"

// ...

then: "the account's balance is \$10"

// ...

Block descriptions are not only present in source code, but are also available to the Spock runtime. Planned usages of block descriptions are enhanced diagnostic messages, and textual reports that are equally understood by all stakeholders.
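To show how block descriptions read in a complete feature method, here is a minimal sketch of the bank account story. The Account class is hypothetical and deliberately simplistic.

```groovy
import spock.lang.Specification

// Hypothetical domain class used only for this illustration.
class Account {
    BigDecimal balance = 0.0
    void credit(BigDecimal amount) { balance += amount }
}

class AccountSpec extends Specification {
    def "crediting an empty account"() {
        given: "an empty bank account"
        def account = new Account()

        when: "the account is credited \$10"
        account.credit(10.0)

        then: "the account's balance is \$10"
        account.balance == 10.0
    }
}
```

The dollar signs are escaped because Groovy would otherwise treat them as the start of a string interpolation.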

Extensions

As we have seen, Spock offers lots of functionality for writing specifications. However, there always comes a time when something else is needed. Therefore, Spock provides an interception-based extension mechanism. Extensions are activated by annotations called directives. Currently, Spock ships with the following directives:

- @Timeout: Sets a timeout for execution of a feature or fixture method.
- @Ignore: Ignores any feature method carrying this annotation.
- @IgnoreRest: Any feature method carrying this annotation will be executed, all others will be ignored. Useful for quickly running just a single method.
- @FailsWith: Expects a feature method to complete abruptly.

Go to the Extensions chapter to learn how to implement your own directives and extensions.
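The sketch below applies three of these directives to feature methods. The spec and its feature names are hypothetical; the annotations themselves live in the spock.lang package, and the @Timeout value is interpreted in seconds by default.

```groovy
import spock.lang.FailsWith
import spock.lang.Ignore
import spock.lang.Specification
import spock.lang.Timeout

// Hypothetical spec demonstrating built-in directives.
class DirectiveSpec extends Specification {
    @Timeout(5)   // fail the feature if it runs longer than 5 seconds
    def "finishes quickly"() {
        expect:
        (1..1000).sum() == 500500
    }

    @Ignore("not implemented yet")
    def "is skipped"() {
        expect:
        false
    }

    @FailsWith(IndexOutOfBoundsException)
    def "documents a known failure"() {
        when:
        new ArrayList<String>().get(0)   // throws IndexOutOfBoundsException

        then:
        true
    }
}
```

@IgnoreRest is left out on purpose, since adding it to one method would cause the other two to be ignored.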

Comparison to JUnit

Although Spock uses a different terminology, many of its concepts and features are inspired by JUnit. Here is a rough comparison:

| Spock | JUnit |
|---|---|
| Specification | Test class |
| setup() | @Before |
| cleanup() | @After |
| setupSpec() | @BeforeClass |
| cleanupSpec() | @AfterClass |
| Feature | Test |
| Feature method | Test method |
| Data-driven feature | Theory |
| Condition | Assertion |
| Exception condition | @Test(expected=…) |
| Interaction | Mock expectation (e.g. in Mockito) |

Data Driven Testing

Oftentimes, it is useful to exercise the same test code multiple times, with varying inputs and expected results. Spock’s data driven testing support makes this a first class feature.

Introduction

Suppose we want to specify the behavior of the Math.max method:

class MathSpec extends Specification {

def "maximum of two numbers"() {

expect:

// exercise math method for a few different inputs

Math.max(1, 3) == 3

Math.max(7, 4) == 7

Math.max(0, 0) == 0

}

}

Although this approach is fine in simple cases like this one, it has some potential drawbacks:

- Code and data are mixed and cannot easily be changed independently
- Data cannot easily be auto-generated or fetched from external sources
- In order to exercise the same code multiple times, it either has to be duplicated or extracted into a separate method
- In case of a failure, it may not be immediately clear which inputs caused the failure
- Exercising the same code multiple times does not benefit from the same isolation as executing separate methods does

Spock’s data-driven testing support tries to address these concerns. To get started, let’s refactor the above code into a data-driven feature method. First, we introduce three method parameters (called data variables) that replace the hard-coded integer values:

class MathSpec extends Specification {

def "maximum of two numbers"(int a, int b, int c) {

expect:

Math.max(a, b) == c

...

}

}

We have finished the test logic, but still need to supply the data values to be used. This is done in a where: block, which always comes at the end of the method. In the simplest (and most common) case, the where: block holds a data table.

Data Tables

Data tables are a convenient way to exercise a feature method with a fixed set of data values:

class MathSpec extends Specification {

def "maximum of two numbers"(int a, int b, int c) {

expect:

Math.max(a, b) == c

where:

a | b | c

1 | 3 | 3

7 | 4 | 7

0 | 0 | 0

}

}

The first line of the table, called the table header, declares the data variables. The subsequent lines, called table rows, hold the corresponding values. For each row, the feature method will get executed once; we call this an iteration of the method. If an iteration fails, the remaining iterations will nevertheless be executed. All failures will be reported.

Data tables must have at least two columns. A single-column table can be written as:

where:

a | _

1 | _

7 | _

0 | _

A sequence of two or more underscores can be used to split one wide data table into multiple narrower ones. Without this separator, and without any other data variable assignment in between, there is no way to have multiple data tables in one where block; the second table would just be treated as further iterations of the first table, including its apparent header row:

where:

a | _

1 | _

7 | _

0 | _

__

b | c

1 | 2

3 | 4

5 | 6

This is semantically identical to one wider, combined data table:

where:

a | b | c

1 | 1 | 2

7 | 3 | 4

0 | 5 | 6

The sequence of two or more underscores can be used anywhere in the where block. It is ignored everywhere except between two data tables, where it separates them. This means that the separator can also be used as a styling element in different ways. It can be used as a separator line, as shown in the last example, or it can, for example, serve visually as the top border of tables in addition to separating them:

where:

_____

a | _

1 | _

7 | _

0 | _

_____

b | c

1 | 2

3 | 4

5 | 6

Isolated Execution of Iterations

Iterations are isolated from each other in the same way as separate feature methods. Each iteration gets its own instance of the specification class, and the setup and cleanup methods will be called before and after each iteration, respectively.

Sharing of Objects between Iterations

In order to share an object between iterations, it has to be kept in a @Shared or static field.

Note: Only @Shared and static variables can be accessed from within a where: block.

Note that such objects will also be shared with other methods. There is currently no good way to share an object just between iterations of the same method. If you consider this a problem, consider putting each method into a separate spec, all of which can be kept in the same file. This achieves better isolation at the cost of some boilerplate code.
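A minimal sketch of a where: block that reads from a @Shared field and a static constant follows. The spec, field, and constant names are hypothetical.

```groovy
import spock.lang.Shared
import spock.lang.Specification

// Hypothetical spec: data for the iterations comes from shared state.
class SharedDataSpec extends Specification {
    @Shared List<Integer> samples = [1, 7, 0]   // accessible inside where:
    static final int OFFSET = 10                // static fields work as well

    def "adding the offset increases every sample"() {
        expect:
        value + OFFSET > value

        where:
        value << samples    // a plain instance field could not be used here
    }
}
```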

Syntactic Variations

The previous code can be tweaked in a few ways.

First, since the where: block already declares all data variables, the method parameters can be omitted.[1] You can also omit some parameters and specify others, for example to have them typed. The order is not important either; data variables are matched by name to the specified method parameters.

Second, inputs and expected outputs can be separated with a double pipe symbol (||) to visually set them apart.

With this, the code becomes:

class MathSpec extends Specification {

def "maximum of two numbers"() {

expect:

Math.max(a, b) == c

where:

a | b || c

1 | 3 || 3

7 | 4 || 7

0 | 0 || 0

}

}

As an alternative to single or double pipes, you can also use any number of semicolons to separate data columns from each other:

class MathSpec extends Specification {

def "maximum of two numbers"() {

expect:

Math.max(a, b) == c

where:

a ; b ;; c

1 ; 3 ;; 3

7 ; 4 ;; 7

0 ; 0 ;; 0

}

}

Pipes and semicolons cannot be mixed as data column separators within one table. If the column separator changes, this starts a new stand-alone data table:

class MathSpec extends Specification {

def "maximum of two numbers"() {

expect:

Math.max(a, b) == c

Math.max(d, e) == f

where:

a | b || c

1 | 3 || 3

7 | 4 || 7

0 | 0 || 0

d ; e ;; f

1 ; 3 ;; 3

7 ; 4 ;; 7

0 ; 0 ;; 0

}

}

Reporting of Failures

Let’s assume that our implementation of the max method has a flaw, and one of the iterations fails:

maximum of two numbers [a: 1, b: 3, c: 3, #0]   PASSED
maximum of two numbers [a: 7, b: 4, c: 7, #1]   FAILED

Condition not satisfied:

Math.max(a, b) == c
|    |   |  |  |  |
|    |   7  4  |  7
|    42        false
class java.lang.Math

maximum of two numbers [a: 0, b: 0, c: 0, #2]   PASSED

The obvious question is: Which iteration failed, and what are its data values? In our example, it isn’t hard to figure out from the rich condition rendering that it’s the second iteration (with index 1) that failed. At other times this can be more difficult or even impossible.[2] In any case, Spock makes it loud and clear which iteration failed, rather than just reporting the failure. Iterations of a feature method are by default unrolled with a rich naming pattern. This pattern can be configured as documented in Unrolled Iteration Names, or the unrolling can be disabled as described in the following section.

Method Uprolling and Unrolling

Iterations of a method annotated with @Rollup are not reported independently but only aggregated within the feature. This can be useful, for example, if you produce many test cases from calculations, or if you use external data like the contents of a database as test data and do not want the test count to vary:

@Rollup

def "maximum of two numbers"() {

...

Note that up- and unrolling has no effect on how the method gets executed; it is only an alteration in reporting. Depending on the execution environment, the output will look something like:

maximum of two numbers   FAILED

Condition not satisfied:

Math.max(a, b) == c
|    |   |  |  |  |
|    |   7  4  |  7
|    42        false
class java.lang.Math

The @Rollup annotation can also be placed on a spec. This has the same effect as placing it on each data-driven feature method of the spec that does not have an @Unroll annotation.

Alternatively, the configuration file setting unrollByDefault in the unroll section can be set to false to roll up all features automatically unless they are annotated with @Unroll or are contained in a spec annotated with @Unroll. This reinstates the pre-Spock 2.0 behavior, where rolling up was the default.

unroll {

unrollByDefault false

}

It is illegal to annotate a spec or a feature with both the @Unroll and the @Rollup annotation. If this is detected, an exception is thrown.

To summarize:

A feature will be uprolled

- if the method is annotated with @Rollup
- if the method is not annotated with @Unroll and the spec is annotated with @Rollup
- if neither the method nor the spec is annotated with @Unroll and the configuration option unroll { unrollByDefault } is set to false

A feature will be unrolled

- if the method is annotated with @Unroll
- if the method is not annotated with @Rollup and the spec is annotated with @Unroll
- if neither the method nor the spec is annotated with @Rollup and the configuration option unroll { unrollByDefault } is set to its default value true
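The precedence rules summarized above can be sketched with a spec-level @Rollup and a method-level @Unroll. The spec name RollupDemoSpec and its features are hypothetical; both annotations come from the spock.lang package.

```groovy
import spock.lang.Rollup
import spock.lang.Specification
import spock.lang.Unroll

@Rollup   // applies to every data-driven feature without its own @Unroll
class RollupDemoSpec extends Specification {
    def "maximum of two numbers"() {   // reported as one aggregated feature
        expect:
        Math.max(a, b) == c

        where:
        a | b || c
        1 | 3 || 3
        7 | 4 || 7
    }

    @Unroll   // the method-level annotation takes precedence over the spec
    def "minimum of two numbers"() {   // each iteration is reported separately
        expect:
        Math.min(a, b) == c

        where:
        a | b || c
        1 | 3 || 1
        7 | 4 || 4
    }
}
```

Both features execute the same number of iterations; only the way they appear in the report differs.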

Data Pipes

Data tables aren’t the only way to supply values to data variables. In fact, a data table is just syntactic sugar for one or more data pipes:

...

where:

a << [1, 7, 0]

b << [3, 4, 0]

c << [3, 7, 0]

A data pipe, indicated by the left-shift (<<) operator, connects a data variable to a data provider. The data provider holds all values for the variable, one per iteration. Any object that Groovy knows how to iterate over can be used as a data provider. This includes objects of type Collection, String, Iterable, and objects implementing the Iterable contract. Data providers don’t necessarily have to be the data (as in the case of a Collection); they can fetch data from external sources like text files, databases and spreadsheets, or generate data randomly. Data providers are queried for their next value only when needed (before the next iteration).
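To illustrate a data provider that is not itself the data, here is a small sketch of a provider that computes its values on demand. The Squares class and the spec around it are hypothetical names introduced for this example; the only thing Spock relies on is that Groovy can iterate over the provider.

```groovy
import spock.lang.Specification

// Hypothetical data provider: yields the squares 1, 4, 9, ... for the
// first `count` positive integers. It is not a Collection; each value is
// computed only when the iterator is advanced.
class Squares implements Iterable<Integer> {
    final int count

    Squares(int count) { this.count = count }

    @Override
    Iterator<Integer> iterator() {
        int n = 0
        // Groovy map-to-interface coercion: each key supplies the
        // implementation of the Iterator method with the same name.
        return [hasNext: { n < count }, next: { n++; n * n }] as Iterator
    }
}

class SquaresSpec extends Specification {
    def "#root squared is #square"() {
        expect:
        root * root == square

        where:
        root   << [1, 2, 3]        // a plain list as data provider
        square << new Squares(3)   // a computed data provider
    }
}
```

Both providers supply three values, so the feature runs three iterations.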

Multi-Variable Data Pipes

If a data provider returns multiple values per iteration (as an object that Groovy knows how to iterate over), it can be connected to multiple data variables simultaneously. The syntax is somewhat similar to Groovy multi-assignment but uses brackets instead of parentheses on the left-hand side:

@Shared sql = Sql.newInstance("jdbc:h2:mem:", "org.h2.Driver")

def "maximum of two numbers"() {

expect:

Math.max(a, b) == c

where:

[a, b, c] << sql.rows("select a, b, c from maxdata")

}

Data values that aren’t of interest can be ignored with an underscore (_):

...

where:

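[a, b, _, c] << sql.rows("select * from maxdata")

The multi-assignments can even be nested. The following example will generate these iterations:

| a | b | c |
|---|---|---|
| ['a1', 'a2'] | 'b1' | 'c1' |
| ['a2', 'a1'] | 'b1' | 'c1' |
| ['a1', 'a2'] | 'b2' | 'c2' |
| ['a2', 'a1'] | 'b2' | 'c2' |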

...

where:

[a, [b, _, c]] << [

['a1', 'a2'].permutations(),

[

['b1', 'd1', 'c1'],

['b2', 'd2', 'c2']

]

].combinations()

Named deconstruction of data pipes

Since Spock 2.2, multi-variable data pipes can also be deconstructed from maps. This is useful when the data provider returns a map with named keys, or when long values don’t fit well into a data table and a map is easier to read.

...

where:

[a, b, c] << [

[

a: 1,

b: 3,

c: 5

],

[

a: 2,

b: 4,

c: 6

]

]

You can use named deconstruction with nested data pipes, but only on the innermost nesting level.

...

where:

[a, [b, c]] << [

[1, [b: 3, c: 5]],

[2, [c: 6, b: 4]]

]

Data Variable Assignment

A data variable can be directly assigned a value:

...

where:

a = 3

b = Math.random() * 100

c = a > b ? a : b

Assignments are re-evaluated for every iteration. As already shown above, the right-hand side of an assignment may refer to other data variables:

...

where:

row << sql.rows("select * from maxdata")

// pick apart columns

a = row.a

b = row.b

c = row.c

Accessing Other Data Variables

There are only two ways to access one data variable from the calculation of another data variable.

The first possibility is to use derived data variables, as shown in the last section. Every data variable that is defined by a direct assignment can access all previously defined data variables, including the ones defined through data tables or data pipes:

...

where:

a = 3

b = Math.random() * 100

c = a > b ? a : b

The second possibility is to access previous columns within data tables:

...

where:

a | b

3 | a + 1

7 | a + 2

0 | a + 3

This also includes columns in previous data tables in the same where block:

...

where:

a | b

3 | a + 1

7 | a + 2

0 | a + 3

and:

c = 1

and:

d | e

a * 2 | b * 2

a * 3 | b * 3

a * 4 | b * 4

Multi-Variable Assignment

As with data pipes, you can also assign to multiple variables in one expression if you have an object that Groovy can iterate over. Unlike with data pipes, the syntax here is identical to standard Groovy multi-assignment syntax:

@Shared sql = Sql.newInstance("jdbc:h2:mem:", "org.h2.Driver")

def "maximum of two numbers multi-assignment"() {

expect:

Math.max(a, b) == c

where:

row << sql.rows("select a, b, c from maxdata")

(a, b, c) = row

}

Data values that aren’t of interest can be ignored with an underscore (_):

...

where:

row << sql.rows("select * from maxdata")

(a, b, _, c) = row

Combining Data Tables, Data Pipes, and Variable Assignments

Data tables, data pipes, and variable assignments can be combined as needed:

...

where:

a | b

1 | a + 1

7 | a + 2

0 | a + 3

c << [3, 4, 0]

d = a > c ? a : c

Type Coercion for Data Variable Values

Data variable values are coerced to the declared parameter type using type coercion. Because of this, custom type conversions can be provided as an extension module or with the help of the @Use extension on the specification (it has no effect on the where: block if applied to a feature).

def "type coercion for data variable values"(Integer i) {

expect:

i instanceof Integer

i == 10

where:

i = "10"

}

@Use(CoerceBazToBar)

class Foo extends Specification {

def foo(Bar bar) {

expect:

bar == Bar.FOO

where:

bar = Baz.FOO

}

}

enum Bar { FOO, BAR }

enum Baz { FOO, BAR }

class CoerceBazToBar {

static Bar asType(Baz self, Class<Bar> clazz) {

return Bar.valueOf(self.name())

}

}

Number of Iterations

The number of iterations depends on how much data is available. Successive executions of the same method can

yield different numbers of iterations. If a data provider runs out of values sooner than its peers, an exception will occur.

Variable assignments don’t affect the number of iterations. A where: block that only contains assignments yields

exactly one iteration.
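The following sketch shows how the iteration count follows from the where: block. It assumes Spock 2.x; the spec and feature names are hypothetical.

```groovy
import spock.lang.Specification

class IterationCountSpec extends Specification {

    // The single data pipe holds three values, so there are three
    // iterations. The assignment is re-evaluated in each of them but does
    // not add iterations of its own.
    def "data pipe determines the number of iterations"() {
        expect:
        doubled == 2 * n

        where:
        n << [1, 2, 3]
        doubled = n * 2
    }

    // Only assignments in the where: block: exactly one iteration.
    def "assignments alone yield a single iteration"() {
        expect:
        a + b == 5

        where:
        a = 2
        b = 3
    }
}
```

If a second data pipe with fewer values than n were added to the first feature, that provider would run out of values early and an exception would occur, as described above.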

Closing of Data Providers

After all iterations have completed, the zero-argument close method is called on all data providers that have

such a method.
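A data provider that holds a resource can therefore release it in close(). The sketch below uses a hypothetical TrackedProvider whose close method merely records that it was called; a real provider might close a file or a database connection instead.

```groovy
import spock.lang.Specification

// Hypothetical provider that remembers whether close() has been called.
class TrackedProvider implements Iterable<Integer> {
    static boolean closed = false

    @Override
    Iterator<Integer> iterator() { [1, 2, 3].iterator() }

    // Zero-argument close method, as described in this section.
    void close() { closed = true }
}

class ClosingProviderSpec extends Specification {
    def "value #n is positive"() {
        expect:
        n > 0

        where:
        n << new TrackedProvider()
    }
}
```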

Unrolled Iteration Names

By default, the name of an unrolled iteration is the name of the feature, plus the data variables and the iteration index. This always produces unique names and should make it easy to identify the failing data variable combination.

The example at Reporting of Failures, for instance, shows with maximum of two numbers [a: 7, b: 4, c: 7, #1] that the second iteration (#1), where the data variables have the values 7, 4 and 7, failed.

With a bit of effort, we can do even better:

def "maximum of #a and #b is #c"() {

...

This method name uses placeholders, denoted by a leading hash sign (#), to refer to data variables a, b, and c. In the output, the placeholders will be replaced with concrete values:

maximum of 1 and 3 is 3   PASSED
maximum of 7 and 4 is 7   FAILED

Math.max(a, b) == c
|    |   |  |  |  |
|    |   7  4  |  7
|    42        false
class java.lang.Math

maximum of 0 and 0 is 0   PASSED

Now we can tell at a glance that the max method failed for inputs 7 and 4.

An unrolled method name is similar to a Groovy GString, except for the following differences:

- Expressions are denoted with # instead of $, and there is no equivalent for the ${…} syntax.
- Expressions only support property access and zero-arg method calls.

Given a class Person with properties name and age, and a data variable person of type Person, the

following are valid method names:

def "#person is #person.age years old"() { // property access

def "#person.name.toUpperCase()"() { // zero-arg method call

Non-string values (like #person above) are converted to Strings according to Groovy semantics.

The following are invalid method names:

def "#person.name.split(' ')[1]" { // cannot have method arguments

def "#person.age / 2" { // cannot use operators

If necessary, additional data variables can be introduced to hold more complex expressions:

def "#lastName"() {

...

where:

person << [new Person(age: 14, name: 'Phil Cole')]

lastName = person.name.split(' ')[1]

}

In addition to the data variables, the tokens #featureName and #iterationIndex are supported. The former does not make much sense inside an actual feature name, but there are two other places where an unroll-pattern can be defined, where it is more useful.

def "#person is #person.age years old [#iterationIndex]"() {

will be reported as

╷

└─ Spock ✔

└─ PersonSpec ✔

└─ #person.name is #person.age years old [#iterationIndex] ✔

├─ Fred is 38 years old [0] ✔

├─ Wilma is 36 years old [1] ✔

└─ Pebbles is 5 years old [2] ✔

As an alternative to specifying the unroll-pattern as the method name, it can be given as a parameter to the @Unroll annotation, which takes precedence over the method name:

@Unroll("#featureName[#iterationIndex] (#person.name is #person.age years old)")

def "person age should be calculated properly"() {

// ...

will be reported as

╷

└─ Spock ✔

└─ PersonSpec ✔

└─ person age should be calculated properly ✔

├─ person age should be calculated properly[0] (Fred is 38 years old) ✔

├─ person age should be calculated properly[1] (Wilma is 36 years old) ✔

└─ person age should be calculated properly[2] (Pebbles is 5 years old) ✔

The advantage is that you can have a descriptive method name for the whole feature, while having a separate template for each iteration. Furthermore, the feature method name is not filled with placeholders and is thus more readable.

If neither a parameter to the annotation is given nor the method name contains a #, the configuration file setting defaultPattern in the unroll section is inspected. If it is set to a non-null string, this value is used as the unroll-pattern. This could, for example, be set to

- #featureName to have all iterations reported with the same name, or
- #featureName[#iterationIndex] to have a simply indexed iteration name, or
- #iterationName if you make sure that in each data-driven feature you also set a data variable called iterationName that is then used for reporting

Special Tokens

This is the complete list of special tokens:

- #featureName is the name of the feature (mostly useful for the defaultPattern setting)
- #iterationIndex is the current iteration index
- #dataVariables lists all data variables for this iteration, e.g. x: 1, y: 2, z: 3
- #dataVariablesWithIndex is the same as #dataVariables but with an index at the end, e.g. x: 1, y: 2, z: 3, #0

Configuration

unroll {

defaultPattern '#featureName[#iterationIndex]'

}

If none of the three described ways is used to set a custom unroll-pattern, the feature name is used by default, suffixed with all data variable names and their values and finally the iteration index, so the result will be, for example, my feature [x: 1, y: 2, z: 3, #0].

If there is an error in an unroll expression (for example, a typo in a variable name, or an exception during evaluation of a property or method in the expression), the test will fail. This does not apply to the automatic fallback rendering of the data variables that is used when no unroll-pattern is set in any way; that rendering will never fail the test, no matter what happens.

Failing tests on errors in unroll expressions can be disabled by setting the configuration file setting validateExpressions in the unroll section to false. If this is done and an error happens, the erroneous expression #foo.bar will be substituted by #Error:foo.bar.

unroll {

validateExpressions false

}

Some reporting frameworks and IDEs support proper tree-based reporting. In these cases, it might be desirable to omit the feature name from the iteration reporting.

unroll {

includeFeatureNameForIterations false

}

With includeFeatureNameForIterations true

╷

└─ Spock ✔

└─ ASpec ✔

└─ really long and informative test name that doesn't have to be repeated ✔

├─ really long and informative test name that doesn't have to be repeated [x: 1, y: a, #0] ✔

├─ really long and informative test name that doesn't have to be repeated [x: 2, y: b, #1] ✔

└─ really long and informative test name that doesn't have to be repeated [x: 3, y: c, #2] ✔

With includeFeatureNameForIterations false

╷

└─ Spock ✔

└─ ASpec ✔

└─ really long and informative test name that doesn't have to be repeated ✔

├─ x: 1, y: a, #0 ✔

├─ x: 2, y: b, #1 ✔

└─ x: 3, y: c, #2 ✔

Note: The same can be achieved for individual features by using @Unroll('#dataVariablesWithIndex').

Interaction Based Testing

Interaction-based testing is a design and testing technique that emerged in the Extreme Programming (XP) community in the early 2000s. Focusing on the behavior of objects rather than their state, it explores how the object(s) under specification interact, by way of method calls, with their collaborators.

For example, suppose we have a Publisher that sends messages to its `Subscriber`s:

class Publisher {

List<Subscriber> subscribers = []

int messageCount = 0

void send(String message){

subscribers*.receive(message)

messageCount++

}

}

interface Subscriber {

void receive(String message)

}

class PublisherSpec extends Specification {

Publisher publisher = new Publisher()

}

How are we going to test Publisher? With state-based testing, we can verify that the publisher keeps track of its

subscribers. The more interesting question, though, is whether a message sent by the publisher

is received by the subscribers. To answer this question, we need a special implementation of

Subscriber that listens in on the conversation between the publisher and its subscribers. Such an

implementation is called a mock object.

While we could certainly create a mock implementation of Subscriber by hand, writing and maintaining this code

can get unpleasant as the number of methods and complexity of interactions increases. This is where mocking frameworks

come in: They provide a way to describe the expected interactions between an object under specification and its

collaborators, and can generate mock implementations of collaborators that verify these expectations.

The Java world has no shortage of popular and mature mocking frameworks: JMock, EasyMock, Mockito, to name just a few. Although each of these tools can be used together with Spock, we decided to roll our own mocking framework, tightly integrated with Spock’s specification language. This decision was driven by the desire to leverage all of Groovy’s capabilities to make interaction-based tests easier to write, more readable, and ultimately more fun. We hope that by the end of this chapter, you will agree that we have achieved these goals.

Except where indicated, all features of Spock’s mocking framework work both for testing Java and Groovy code.

Creating Mock Objects

Mock objects are created with the MockingApi.Mock() method.[3]

Let’s create two mock subscribers:

def subscriber = Mock(Subscriber)

def subscriber2 = Mock(Subscriber)

Alternatively, the following Java-like syntax is supported, which may give better IDE support:

Subscriber subscriber = Mock()

Subscriber subscriber2 = Mock()

Here, the mock’s type is inferred from the variable type on the left-hand side of the assignment.

Note: If the mock’s type is given on the left-hand side of the assignment, it’s permissible (though not required) to omit it on the right-hand side.

Mock objects literally implement (or, in the case of a class, extend) the type they stand in for. In other

words, in our example subscriber is-a Subscriber. Hence it can be passed to statically typed (Java)

code that expects this type.

Default Behavior of Mock Objects

Initially, mock objects have no behavior. Calling methods on them is allowed but has no effect other than returning

the default value for the method’s return type (false, 0, or null). Exceptions are the Object.equals,

Object.hashCode, and Object.toString methods, which have the following default behavior: A mock object is only

equal to itself, has a unique hash code, and a string representation that includes the name of the type it represents.

This default behavior is overridable by stubbing the methods, which we will learn about in the Stubbing section.
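The defaults described here can be observed directly. The sketch below uses a hypothetical Account interface that is not part of the running example; it only serves to show one method per kind of return type.

```groovy
import spock.lang.Specification

// Hypothetical collaborator, introduced only for this illustration.
interface Account {
    boolean isActive()
    int getBalance()
    String getOwner()
}

class DefaultMockBehaviorSpec extends Specification {
    def "an unconfigured mock returns default values"() {
        given:
        Account account = Mock()
        Account other = Mock()

        expect: "default values for the declared return types"
        !account.isActive()          // boolean -> false
        account.getBalance() == 0    // int -> 0
        account.getOwner() == null   // reference type -> null

        and: "a mock object is only equal to itself"
        account == account
        account != other
    }
}
```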

Injecting Mock Objects into Code Under Specification

After creating the publisher and its subscribers, we need to make the latter known to the former:

class PublisherSpec extends Specification {

Publisher publisher = new Publisher()

Subscriber subscriber = Mock()

Subscriber subscriber2 = Mock()

def setup() {

publisher.subscribers << subscriber // << is a Groovy shorthand for List.add()

publisher.subscribers << subscriber2

}

We are now ready to describe the expected interactions between the two parties.

Mocking

Mocking is the act of describing (mandatory) interactions between the object under specification and its collaborators. Here is an example:

def "should send messages to all subscribers"() {

when:

publisher.send("hello")

then:

1 * subscriber.receive("hello")

1 * subscriber2.receive("hello")

}

Read out aloud: "When the publisher sends a 'hello' message, then both subscribers should receive that message exactly once."

When this feature method gets run, all invocations on mock objects that occur while executing the

when: block will be matched against the interactions described in the then: block. If one of the interactions isn’t

satisfied, a (subclass of) InteractionNotSatisfiedError will be thrown. This verification happens automatically

and does not require any additional code.

Interactions

Let’s take a closer look at the then: block. It contains two interactions, each of which has four distinct

parts: a cardinality, a target constraint, a method constraint, and an argument constraint:

1 * subscriber.receive("hello")
|   |          |       |
|   |          |       argument constraint
|   |          method constraint
|   target constraint
cardinality

Cardinality

The cardinality of an interaction describes how often a method call is expected. It can either be a fixed number or a range:

1 * subscriber.receive("hello") // exactly one call

0 * subscriber.receive("hello") // zero calls

(1..3) * subscriber.receive("hello") // between one and three calls (inclusive)

(1.._) * subscriber.receive("hello") // at least one call

(_..3) * subscriber.receive("hello") // at most three calls

_ * subscriber.receive("hello") // any number of calls, including zero

// (rarely needed; see 'Strict Mocking')

Target Constraint

The target constraint of an interaction describes which mock object is expected to receive the method call:

1 * subscriber.receive("hello") // a call to 'subscriber'

1 * _.receive("hello") // a call to any mock object

Method Constraint

The method constraint of an interaction describes which method is expected to be called:

1 * subscriber.receive("hello") // a method named 'receive'

1 * subscriber./r.*e/("hello") // a method whose name matches the given regular expression

// (here: method name starts with 'r' and ends in 'e')

When expecting a call to a getter method, Groovy property syntax can be used instead of method syntax:

1 * subscriber.status // same as: 1 * subscriber.getStatus()

When expecting a call to a setter method, only method syntax can be used:

1 * subscriber.setStatus("ok") // NOT: 1 * subscriber.status = "ok"

Argument Constraints

The argument constraints of an interaction describe which method arguments are expected:

1 * subscriber.receive("hello") // an argument that is equal to the String "hello"

1 * subscriber.receive(!"hello") // an argument that is unequal to the String "hello"

1 * subscriber.receive() // the empty argument list (would never match in our example)

1 * subscriber.receive(_) // any single argument (including null)

1 * subscriber.receive(*_) // any argument list (including the empty argument list)

1 * subscriber.receive(!null) // any non-null argument

1 * subscriber.receive(_ as String) // any non-null argument that is-a String

1 * subscriber.receive(endsWith("lo")) // an argument matching the given Hamcrest matcher

// a String argument ending with "lo" in this case

1 * subscriber.receive({ it.size() > 3 && it.contains('a') })

// an argument that satisfies the given predicate, meaning that

// code argument constraints need to return true or false

// depending on whether they match or not

// (here: message length is greater than 3 and contains the character a)

Argument constraints work as expected for methods with multiple arguments:

1 * process.invoke("ls", "-a", _, !null, { ["abcdefghiklmnopqrstuwx1"].contains(it) })

When dealing with vararg methods, vararg syntax can also be used in the corresponding interactions:

interface VarArgSubscriber {

void receive(String... messages)

}

...

subscriber.receive("hello", "goodbye")

Equality Constraint

The equality constraint uses Groovy equality to check the argument, i.e., argument == constraint. You can use

- any literal: 1 * check('string') / 1 * check(1) / 1 * check(null),
- a variable: 1 * check(var),
- a list or map literal: 1 * check([1]) / 1 * check([foo: 'bar']),
- an object: 1 * check(new Person('sam')),
- or the result of a method call: 1 * check(person())

as an equality constraint.

Hamcrest Constraint

A variation of the equality constraint: if the constraint object is a Hamcrest matcher, that matcher is used to check the argument.

Wildcard Constraint

The wildcard constraint will match any argument, null or otherwise. It is written as _, i.e. 1 * subscriber.receive(_).

There is also the spread wildcard constraint *_, which matches any number of arguments, including none: 1 * subscriber.receive(*_).

Code Constraint

The code constraint is the most versatile of all. It is a Groovy closure that gets the argument as its parameter.

The closure is treated as a condition block, so it behaves like a then: block, i.e., every line is treated as an implicit assertion.

It can emulate all but the spread wildcard constraint; however, it is suggested to use the simpler constraints where possible.

You can do multiple assertions, call methods for assertions, or use with/verifyAll.

1 * list.add({

verifyAll(it, Person) {

firstname == 'William'

lastname == 'Kirk'

age == 45

}

})

Negating Constraint

The negating constraint ! is a compound constraint, i.e. it needs to be combined with another constraint to work.

It inverts the result of the nested constraint, e.g., 1 * subscriber.receive(!null) is the combination of

an equality constraint checking for null and then the negating constraint inverting the result, turning it into not null.

Although it can be combined with any other constraint, it does not always make sense, e.g., 1 * subscriber.receive(!_) will match nothing.

Also keep in mind that the diagnostics for a non-matching negating constraint will just be that the inner constraint did match, without any more information.

Type Constraint

The type constraint checks for the type/class of the argument. Like the negating constraint, it is a compound constraint.

It is usually written as _ as Type, which is a combination of the wildcard constraint and the type constraint.

You can combine it with other constraints as well: 1 * subscriber.receive({ it.contains('foo')} as String) will assert that the argument is a String before executing the code constraint to check if it contains foo.

Matching Any Method Call

Sometimes it can be useful to match "anything", in some sense of the word:

1 * subscriber._(*_) // any method on subscriber, with any argument list

1 * subscriber._ // shortcut for and preferred over the above

1 * _._ // any method call on any mock object

1 * _ // shortcut for and preferred over the above

Note: Although (_.._) * _._(*_) >> _ is a valid interaction declaration, it is neither good style nor particularly useful.

Strict Mocking

Now, when would matching any method call be useful? A good example is strict mocking, a style of mocking where no interactions other than those explicitly declared are allowed:

when:

publisher.send("hello")

then:

1 * subscriber.receive("hello") // demand one 'receive' call on 'subscriber'

_ * auditing._ // allow any interaction with 'auditing'

0 * _ // don't allow any other interaction

0 * only makes sense as the last interaction of a then: block or method. Note the

use of _ * (any number of calls), which allows any interaction with the auditing component.

Note: _ * is only meaningful in the context of strict mocking. In particular, it is never necessary when Stubbing an invocation. For example, _ * auditing.record() >> "ok" can (and should!) be simplified to auditing.record() >> "ok".

Where to Declare Interactions

So far, we declared all our interactions in a then: block. This often results in a spec that reads naturally.

However, it is also permissible to put interactions anywhere before the when: block that is supposed to satisfy

them. In particular, this means that interactions can be declared in a setup method. Interactions can also be

declared in any "helper" instance method of the same specification class.

When an invocation on a mock object occurs, it is matched against interactions in the interactions' declared order.

If an invocation matches multiple interactions, the earliest declared interaction that hasn’t reached its upper

invocation limit will win. There is one exception to this rule: Interactions declared in a then: block are

matched against before any other interactions. This allows overriding interactions declared in, say, a setup

method with interactions declared in a then: block.
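The matching order can be made visible with a small sketch. It repeats the Publisher and Subscriber types from the beginning of this chapter so that it is self-contained; the spec name and feature name are made up for illustration.

```groovy
import spock.lang.Specification

interface Subscriber {
    void receive(String message)
}

class Publisher {
    List<Subscriber> subscribers = []
    int messageCount = 0

    void send(String message) {
        subscribers*.receive(message)
        messageCount++
    }
}

class InteractionPlacementSpec extends Specification {
    Publisher publisher = new Publisher()
    Subscriber subscriber = Mock()

    def setup() {
        publisher.subscribers << subscriber
        // Declared first, and broad enough to match every receive() call.
        _ * subscriber.receive(_)
    }

    def "then: interactions are matched before those declared in setup"() {
        when:
        publisher.send("hello")

        then:
        // The call is matched against this interaction first, because it is
        // declared in a then: block. If the setup interaction were consulted
        // first, it would absorb the call and this one would see too few
        // invocations.
        1 * subscriber.receive("hello")
    }
}
```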

Declaring Interactions at Mock Creation Time

If a mock has a set of "base" interactions that don’t vary, they can be declared right at mock creation time:

Subscriber subscriber = Mock {

1 * receive("hello")

1 * receive("goodbye")

}

This feature is particularly attractive for Stubbing and with dedicated Stubs. Note that the interactions don’t (and cannot [4]) have a target constraint; it’s clear from the context which mock object they belong to.

Interactions can also be declared when initializing an instance field with a mock:

class MySpec extends Specification {

Subscriber subscriber = Mock {

1 * receive("hello")

1 * receive("goodbye")

}

}

Grouping Interactions with Same Target

Interactions sharing the same target can be grouped in a Specification.with block. Similar to

Declaring Interactions at Mock Creation Time, this makes it unnecessary

to repeat the target constraint:

with(subscriber) {

1 * receive("hello")

1 * receive("goodbye")

}

A with block can also be used for grouping conditions with the same target.

Mixing Interactions and Conditions

A then: block may contain both interactions and conditions. Although not strictly required, it is customary

to declare interactions before conditions:

when:

publisher.send("hello")

then:

1 * subscriber.receive("hello")

publisher.messageCount == 1

Read out aloud: "When the publisher sends a 'hello' message, then the subscriber should receive the message exactly once, and the publisher’s message count should be one."

Explicit Interaction Blocks

Internally, Spock must have full information about expected interactions before they take place.

So how is it possible for interactions to be declared in a then: block?

The answer is that under the hood, Spock moves interactions declared in a then: block to immediately

before the preceding when: block. In most cases this works out just fine, but sometimes it can lead to problems:

when:

publisher.send("hello")

then:

def message = "hello"

1 * subscriber.receive(message)

Here we have introduced a variable for the expected argument. (Likewise, we could have introduced a variable

for the cardinality.) However, Spock isn’t smart enough (huh?) to tell that the interaction is intrinsically

linked to the variable declaration. Hence it will just move the interaction, which will cause a

MissingPropertyException at runtime.

One way to solve this problem is to move (at least) the variable declaration to before the when:

block. (Fans of Data Driven Testing might move the variable into a where: block.)

In our example, this would have the added benefit that we could use the same variable for sending the message.

Another solution is to be explicit about the fact that variable declaration and interaction belong together:

when:

publisher.send("hello")

then:

interaction {

def message = "hello"

1 * subscriber.receive(message)

}

Since a MockingApi.interaction block is always moved in its entirety, the code now works as intended.

Scope of Interactions

Interactions declared in a then: block are scoped to the preceding when: block:

when:

publisher.send("message1")

then:

1 * subscriber.receive("message1")

when:

publisher.send("message2")

then:

1 * subscriber.receive("message2")

This makes sure that subscriber receives "message1" during execution of the first when: block,

and "message2" during execution of the second when: block.

Interactions declared outside a then: block are active from their declaration until the end of the

containing feature method.

Interactions are always scoped to a particular feature method. Hence they cannot be declared in a static method,

setupSpec method, or cleanupSpec method. Likewise, mock objects should not be stored in static or @Shared

fields.
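As a contrast to the then:-scoped example, the following sketch declares an interaction in a given: block, so that it stays active across both when: blocks of the feature. The Publisher and Subscriber types are repeated from the beginning of this chapter to keep the example self-contained; the spec name is hypothetical.

```groovy
import spock.lang.Specification

interface Subscriber {
    void receive(String message)
}

class Publisher {
    List<Subscriber> subscribers = []
    int messageCount = 0

    void send(String message) {
        subscribers*.receive(message)
        messageCount++
    }
}

class InteractionScopeSpec extends Specification {
    Publisher publisher = new Publisher()
    Subscriber subscriber = Mock()

    def setup() {
        publisher.subscribers << subscriber
    }

    def "interaction declared in given: spans the whole feature method"() {
        given:
        // Not tied to a single when: block; it counts invocations from
        // here until the end of the feature method.
        2 * subscriber.receive(_)

        when:
        publisher.send("message1")

        then:
        publisher.messageCount == 1

        when:
        publisher.send("message2")

        then:
        publisher.messageCount == 2
    }
}
```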

Verification of Interactions

There are two main ways in which a mock-based test can fail: An interaction can match more invocations than

allowed, or it can match fewer invocations than required. The former case is detected right when the invocation

happens, and causes a TooManyInvocationsError:

Too many invocations for: 2 * subscriber.receive(_) (3 invocations)

To make it easier to diagnose why too many invocations matched, Spock will show all invocations matching the interaction in question:

Matching invocations (ordered by last occurrence):

2 * subscriber.receive("hello") <-- this triggered the error

1 * subscriber.receive("goodbye")

According to this output, one of the receive("hello") calls triggered the TooManyInvocationsError.

Note that because indistinguishable calls like the two invocations of subscriber.receive("hello") are aggregated

into a single line of output, the first receive("hello") may well have occurred before the receive("goodbye").

The second case (fewer invocations than required) can only be detected once execution of the when: block has completed.

(Until then, further invocations may still occur.) It causes a TooFewInvocationsError:

Too few invocations for:

1 * subscriber.receive("hello") (0 invocations)

Note that it doesn’t matter whether the method was not called at all, the same method was called with different arguments,

the same method was called on a different mock object, or a different method was called "instead" of this one;

in any of these cases, a TooFewInvocationsError will occur.

To make it easier to diagnose what happened "instead" of a missing invocation, Spock will show all invocations that didn’t match any interaction, ordered by their similarity with the interaction in question. In particular, invocations that match everything but the interaction’s arguments will be shown first:

Unmatched invocations (ordered by similarity):

1 * subscriber.receive("goodbye")

1 * subscriber2.receive("hello")

Invocation Order

Often, the exact method invocation order isn’t relevant and may change over time. To avoid over-specification, Spock defaults to allowing any invocation order, provided that the specified interactions are eventually satisfied:

then:

2 * subscriber.receive("hello")

1 * subscriber.receive("goodbye")

Here, any of the invocation sequences "hello" "hello" "goodbye", "hello" "goodbye" "hello", and

"goodbye" "hello" "hello" will satisfy the specified interactions.

In those cases where invocation order matters, you can impose an order by splitting up interactions into

multiple then: blocks:

then:

2 * subscriber.receive("hello")

then:

1 * subscriber.receive("goodbye")

Now Spock will verify that both "hello"s are received before the "goodbye".

In other words, invocation order is enforced between but not within then: blocks.

Note: Splitting up a then: block with and: does not impose any ordering, as and: is only meant for documentation purposes and doesn’t carry any semantics.

Mocking Classes

Besides interfaces, Spock also supports mocking of classes. Mocking classes works

just like mocking interfaces; the only additional requirement is to put byte-buddy 1.9+ or

cglib-nodep 3.2.0+ on the class path.

When using, for example,

- normal Mocks or Stubs, or
- Spys that are configured with useObjenesis: true, or
- Spys that spy on a concrete instance like Spy(myInstance),

it is also necessary to put objenesis 3.0+ on the class path. This is not needed for classes with an accessible no-arg constructor or with configured constructorArgs, unless the constructor call should be avoided, for example to prevent unwanted side effects.

If either of these libraries is missing from the class path, Spock will gently let you know.

Stubbing

Stubbing is the act of making collaborators respond to method calls in a certain way. When stubbing a method, you don’t care if and how many times the method is going to be called; you just want it to return some value, or perform some side effect, whenever it gets called.

For the sake of the following examples, let’s modify the Subscriber's receive method

to return a status code that tells if the subscriber was able to process a message:

interface Subscriber {

String receive(String message)

}

Now, let’s make the receive method return "ok" on every invocation:

subscriber.receive(_) >> "ok"

Read out loud: "Whenever the subscriber receives a message, make it respond with 'ok'."

Compared to a mocked interaction, a stubbed interaction has no cardinality on the left end, but adds a response generator on the right end:

subscriber.receive(_) >> "ok"
|          |       |     |
|          |       |     response generator
|          |       argument constraint
|          method constraint
target constraint

A stubbed interaction can be declared in the usual places: either inside a then: block, or anywhere before a

when: block. (See Where to Declare Interactions for the details.) If a mock object is only used for stubbing,

it’s common to declare interactions at mock creation time or in a

given: block.

Returning Fixed Values

We have already seen the use of the right-shift (>>) operator to return a fixed value:

subscriber.receive(_) >> "ok"

To return different values for different invocations, use multiple interactions:

subscriber.receive("message1") >> "ok"

subscriber.receive("message2") >> "fail"

This will return "ok" whenever "message1" is received, and "fail" whenever "message2" is received. There is no limit as to which values can be returned, provided they are compatible with the method’s declared return type.
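Put into a complete specification, fixed responses might look like the following minimal sketch. The spec and feature names are made up for illustration. The last condition relies on the fact that a Mock returns the default value for the return type (null for String) when no stubbed interaction matches.

```groovy
import spock.lang.Specification

interface Subscriber {
    String receive(String message)
}

class FixedResponseSpec extends Specification {
    def "each argument constraint gets its own fixed response"() {
        given: "two stubbed interactions on the same method"
        Subscriber subscriber = Mock()
        subscriber.receive("message1") >> "ok"
        subscriber.receive("message2") >> "fail"

        expect: "the response depends on which interaction matches"
        subscriber.receive("message1") == "ok"
        subscriber.receive("message2") == "fail"

        and: "an unmatched call on a Mock yields the default value"
        subscriber.receive("something else") == null
    }
}
```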

Returning Sequences of Values

To return different values on successive invocations, use the triple-right-shift (>>>) operator:

subscriber.receive(_) >>> ["ok", "error", "error", "ok"]

This will return "ok" for the first invocation, "error" for the second and third invocation, and "ok" for all remaining invocations. The right-hand side must be a value that Groovy knows how to iterate over; in this example, we’ve used a plain list.

Computing Return Values

To compute a return value based on the method’s argument, use the right-shift (>>) operator together with a closure.

If the closure declares a single untyped parameter, it gets passed the method’s argument list:

subscriber.receive(_) >> { args -> args[0].size() > 3 ? "ok" : "fail" }

Here "ok" gets returned if the message is more than three characters long, and "fail" otherwise.

In most cases it would be more convenient to have direct access to the method’s arguments. If the closure declares more than one parameter or a single typed parameter, method arguments will be mapped one-by-one to closure parameters:[5]

subscriber.receive(_) >> { String message -> message.size() > 3 ? "ok" : "fail" }

This response generator behaves the same as the previous one, but is arguably more readable.

If you find yourself in need of more information about a method invocation than its arguments, have a look at

org.spockframework.mock.IMockInvocation. All methods declared in this interface are available inside the closure,

without a need to prefix them. (In Groovy terminology, the closure delegates to an instance of IMockInvocation.)
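The two closure forms can be compared side by side in a small specification. This is a sketch under the assumptions already stated above (a Subscriber whose receive method returns a String); the spec and feature names are illustrative.

```groovy
import spock.lang.Specification

interface Subscriber {
    String receive(String message)
}

class ComputedResponseSpec extends Specification {
    def "a single untyped parameter receives the argument list"() {
        given:
        Subscriber subscriber = Mock()
        // args is the list of method arguments, so args[0] is the message
        subscriber.receive(_) >> { args -> args[0].size() > 3 ? "ok" : "fail" }

        expect:
        subscriber.receive("hello") == "ok"
        subscriber.receive("hi") == "fail"
    }

    def "a typed parameter receives the argument itself"() {
        given:
        Subscriber subscriber = Mock()
        // message is bound directly to the first method argument
        subscriber.receive(_) >> { String message -> message.toUpperCase() }

        expect:
        subscriber.receive("hello") == "HELLO"
    }
}
```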

Performing Side Effects

Sometimes you may want to do more than just computing a return value. A typical example is throwing an exception. Again, closures come to the rescue:

subscriber.receive(_) >> { throw new InternalError("ouch") }

Of course, the closure can contain more code, for example a println statement. It will get executed every time an incoming invocation matches the interaction.

Chaining Method Responses

Method responses can be chained:

subscriber.receive(_) >>> ["ok", "fail", "ok"] >> { throw new InternalError() } >> "ok"

This will return "ok", "fail", "ok" for the first three invocations, throw InternalError for the fourth invocation, and return "ok" for any further invocation.
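A chained response can be exercised step by step as in the following sketch. It uses a shorter chain than the one above and throws IllegalStateException instead of InternalError; both choices are purely illustrative.

```groovy
import spock.lang.Specification

interface Subscriber {
    String receive(String message)
}

class ChainedResponseSpec extends Specification {
    def "chained responses are consumed in declaration order"() {
        given: "a list, then a throwing closure, then a fixed value"
        Subscriber subscriber = Mock()
        subscriber.receive(_) >>> ["ok", "fail"] >> { throw new IllegalStateException("ouch") } >> "done"

        when: "the first two calls consume the list"
        def first = subscriber.receive("a")
        def second = subscriber.receive("b")

        then:
        first == "ok"
        second == "fail"

        when: "the third call reaches the closure"
        subscriber.receive("c")

        then:
        thrown(IllegalStateException)

        when: "all later calls fall through to the last response"
        def later = [subscriber.receive("d"), subscriber.receive("e")]

        then:
        later == ["done", "done"]
    }
}
```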

Returning a default response

If you don’t really care what you return, but you must return a non-null value, you can use _.

This will use the same logic to compute a response as Stub (see Stubs), so it is only really useful for Mock and Spy instances.

subscriber.receive(_) >> _

You can of course use this with chaining as well. Here it might be useful for Stub instances as well.

subscriber.receive(_) >>> ["ok", "fail"] >> _ >> "ok"

An application of this is to let a Mock behave like a Stub, but still be able to do assertions.
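A minimal sketch of that application follows, assuming a Spock version that supports _ as a response and a Subscriber whose receive method returns a String. The interaction both verifies the call and returns the stub-style default, which for String should be the empty string rather than null.

```groovy
import spock.lang.Specification

interface Subscriber {
    String receive(String message)
}

class DefaultResponseSpec extends Specification {
    def "a mock can verify a call and still answer like a stub"() {
        given:
        Subscriber subscriber = Mock()

        when:
        def status = subscriber.receive("hello")

        then: "cardinality is checked and the default response is returned"
        1 * subscriber.receive("hello") >> _
        status == ""
    }
}
```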

The default response will return the mock itself if the return type of the method is assignable from the mock type (excluding Object).

This is useful when dealing with fluent APIs, like builders, which are otherwise really painful to mock.

given:

ThingBuilder builder = Mock() {

_ >> _

}

when:

Thing thing = builder

.id("id-42")

.name("spock")

.weight(100)

.build()

then:

1 * builder.build() >> new Thing(id: 'id-1337')

thing.id == 'id-1337'

The _ >> _ instructs the mock to return the default response for all interactions. However, interactions defined in the then: block take precedence over interactions defined in the given: block, which lets us override and assert the interaction we actually care about.

Combining Mocking and Stubbing

Mocking and stubbing go hand-in-hand:

1 * subscriber.receive("message1") >> "ok"

1 * subscriber.receive("message2") >> "fail"

When mocking and stubbing the same method call, they have to happen in the same interaction. In particular, the following Mockito-style splitting of stubbing and mocking into two separate statements will not work:

given:

subscriber.receive("message1") >> "ok"

when:

publisher.send("message1")

then:

1 * subscriber.receive("message1")

As explained in Where to Declare Interactions, the receive call will first get matched against the interaction in the then: block. Since that interaction doesn’t specify a response, the default value for the method’s return type (null in this case) will be returned. (This is just another facet of Spock’s lenient approach to mocking.) Hence, the interaction in the given: block will never get a chance to match.

Note: Mocking and stubbing of the same method call has to happen in the same interaction.
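Both variants can be contrasted in one specification. This is a sketch only: the Publisher below is a hypothetical helper that records the status each subscriber returns, so that the response actually received can be checked.

```groovy
import spock.lang.Specification

interface Subscriber {
    String receive(String message)
}

// Hypothetical publisher that records each subscriber's status code.
class Publisher {
    List<Subscriber> subscribers = []
    List<String> statuses = []

    void send(String message) {
        subscribers.each { statuses << it.receive(message) }
    }
}

class CombinedInteractionSpec extends Specification {
    Publisher publisher = new Publisher()
    Subscriber subscriber = Mock()

    def setup() {
        publisher.subscribers << subscriber
    }

    def "cardinality and response declared in one interaction"() {
        when:
        publisher.send("message1")

        then:
        1 * subscriber.receive("message1") >> "ok"
        publisher.statuses == ["ok"]
    }

    def "a split declaration yields the default response"() {
        given: "this stubbed interaction never gets a chance to match"
        subscriber.receive("message1") >> "ok"

        when:
        publisher.send("message1")

        then: "this interaction matches first and has no response"
        1 * subscriber.receive("message1")
        publisher.statuses == [null]
    }
}
```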

Other Kinds of Mock Objects

So far, we have created mock objects with the MockingApi.Mock method. Aside from

this method, the MockingApi class provides a couple of other factory methods for creating

more specialized kinds of mock objects.

Stubs

A stub is created with the MockingApi.Stub factory method:

Subscriber subscriber = Stub()

Whereas a mock can be used both for stubbing and mocking, a stub can only be used for stubbing. Limiting a collaborator to a stub communicates its role to the readers of the specification.

Note: If a stub invocation matches a mandatory interaction (like 1 * foo.bar()), an InvalidSpecException is thrown.

Like a mock, a stub allows unexpected invocations. However, the values returned by a stub in such cases are more ambitious:

- For primitive types, the primitive type’s default value is returned.
- For non-primitive numerical values (such as BigDecimal), zero is returned.
- If the value is assignable from the stub instance, then the instance is returned (e.g. builder pattern).
- For non-numerical values, an "empty" or "dummy" object is returned. This could mean an empty String, an empty collection, an object constructed from its default constructor, or another stub returning default values. See class org.spockframework.mock.EmptyOrDummyResponse for the details.

Note: If the response type of the method is a final class or if it requires a class-mocking library and cglib or ByteBuddy are not available, then the "dummy" object creation will fail with a CannotCreateMockException.
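The default values listed above can be observed with a stub that has no declared interactions. The Inventory interface in this sketch is hypothetical and exists only to offer one method per kind of return type.

```groovy
import spock.lang.Specification

// Hypothetical interface, one method per kind of return type.
interface Inventory {
    int quantity()
    BigDecimal totalValue()
    String label()
    List<String> items()
}

class StubDefaultsSpec extends Specification {
    def "a stub answers unexpected calls with empty or dummy values"() {
        given: "a stub without any declared interactions"
        Inventory inventory = Stub()

        expect: "primitive default, numeric zero, empty String, empty collection"
        inventory.quantity() == 0
        inventory.totalValue() == BigDecimal.ZERO
        inventory.label() == ""
        inventory.items() == []
    }
}
```

With Mock() in place of Stub(), the non-primitive methods would return null instead.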

A stub often has a fixed set of interactions, which makes declaring interactions at mock creation time particularly attractive:

Subscriber subscriber = Stub {

receive("message1") >> "ok"

receive("message2") >> "fail"

}

Spies

(Think twice before using this feature. It might be better to change the design of the code under specification.)

A spy is created with the MockingApi.Spy factory method:

SubscriberImpl subscriber = Spy(constructorArgs: ["Fred"])

A spy is always based on a real object. Hence you must provide a class type rather than an interface type, along with any constructor arguments for the type. If no constructor arguments are provided, the type’s no-arg constructor will be used.

If the given constructor arguments lead to an ambiguity, you can cast the constructor arguments as usual, using as or a Java-style cast. For example, if the class under test has one constructor with a String parameter and one with a Pattern parameter, and you want to pass null as the constructor argument:

SubscriberImpl subscriber = Spy(constructorArgs: [null as String])

SubscriberImpl subscriber2 = Spy(constructorArgs: [(Pattern) null])

You may also create a spy from an instantiated object. This may be useful in cases where you do not have full control over the instantiation of types you are interested in spying. (For example, when testing within a Dependency Injection framework such as Spring or Guice.)

Method calls on a spy are automatically delegated to the real object. Likewise, values returned from the real object’s methods are passed back to the caller via the spy.

After creating a spy, you can listen in on the conversation between the caller and the real object underlying the spy: